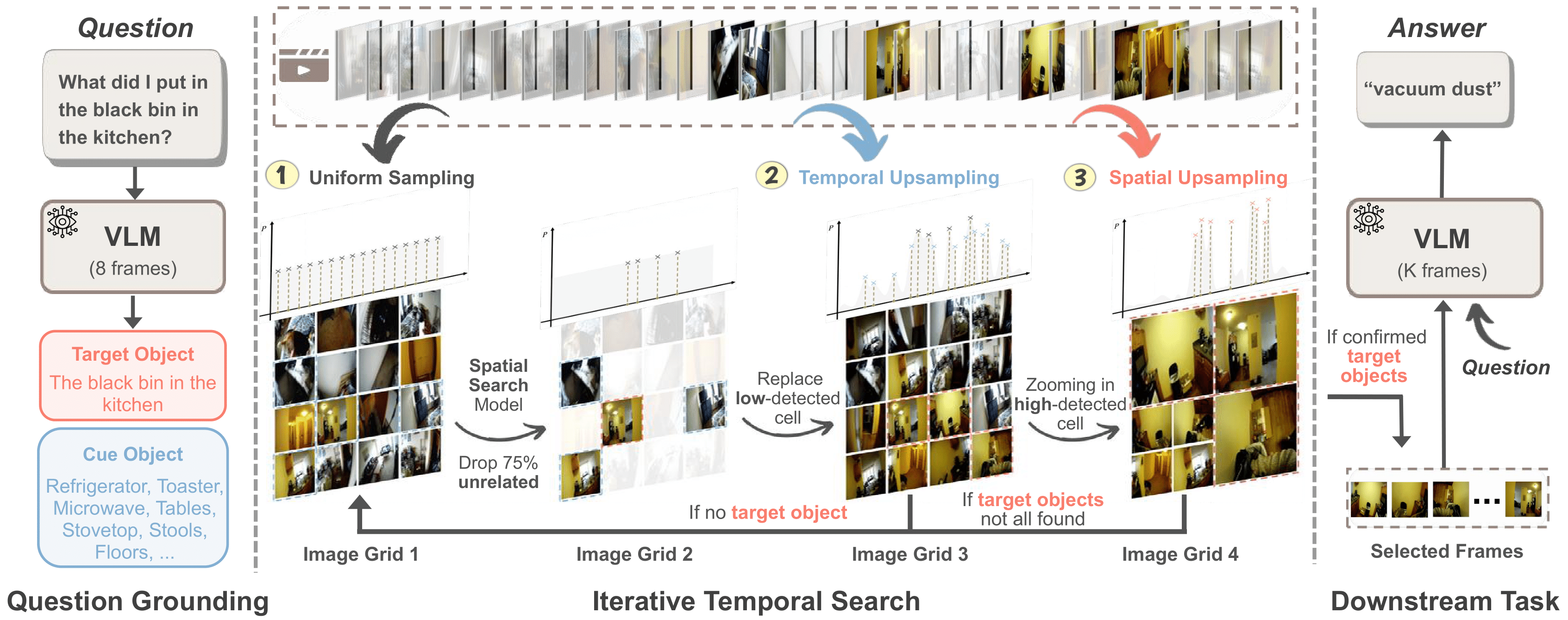

Method Overview

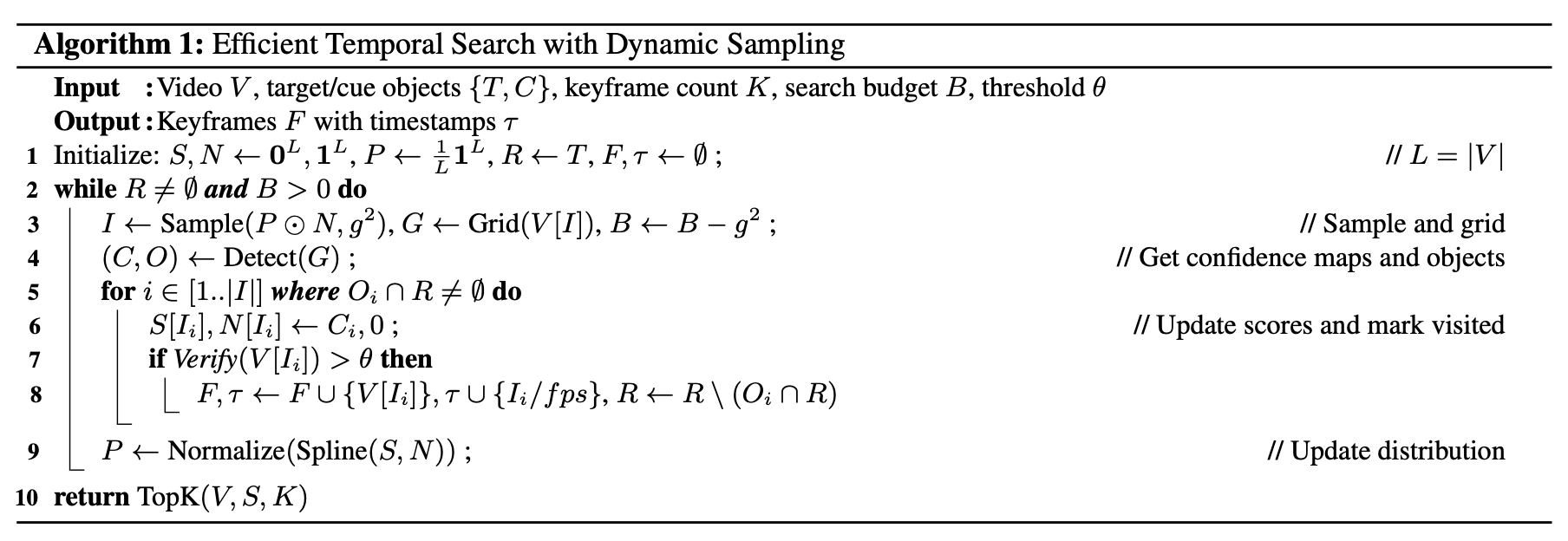

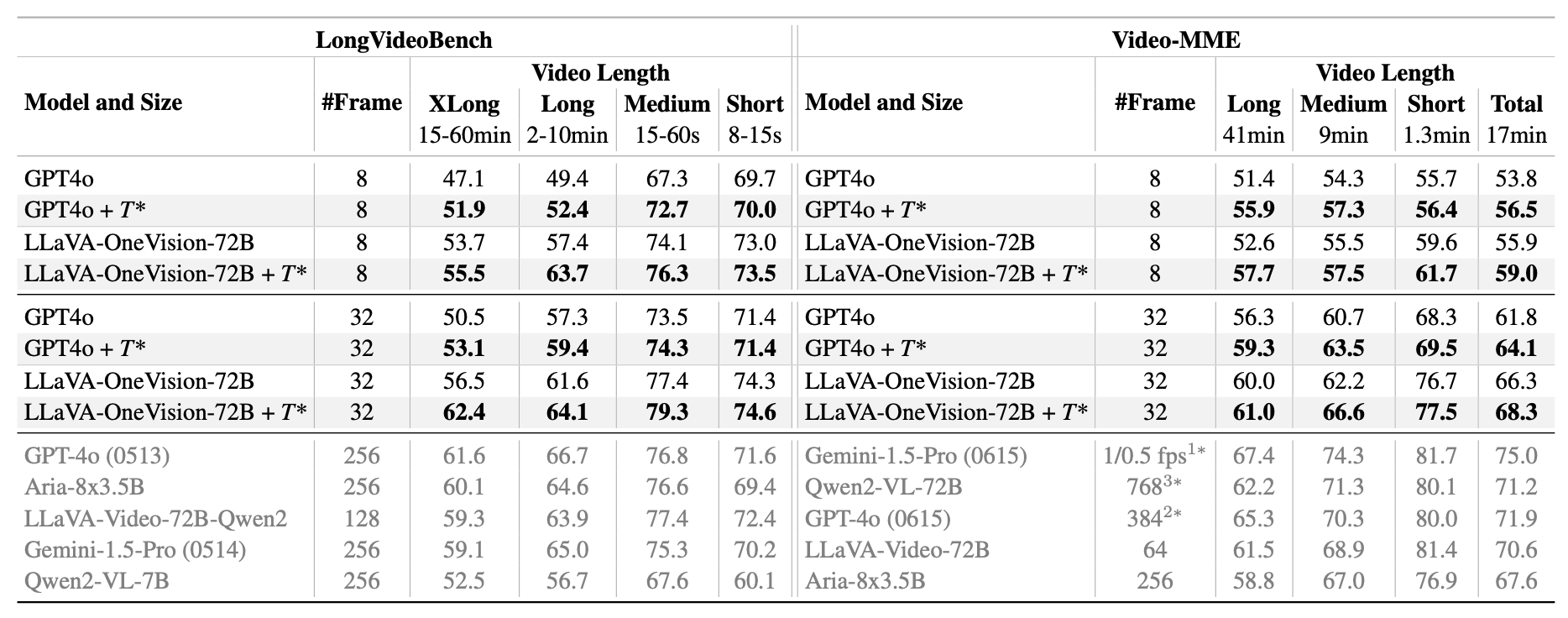

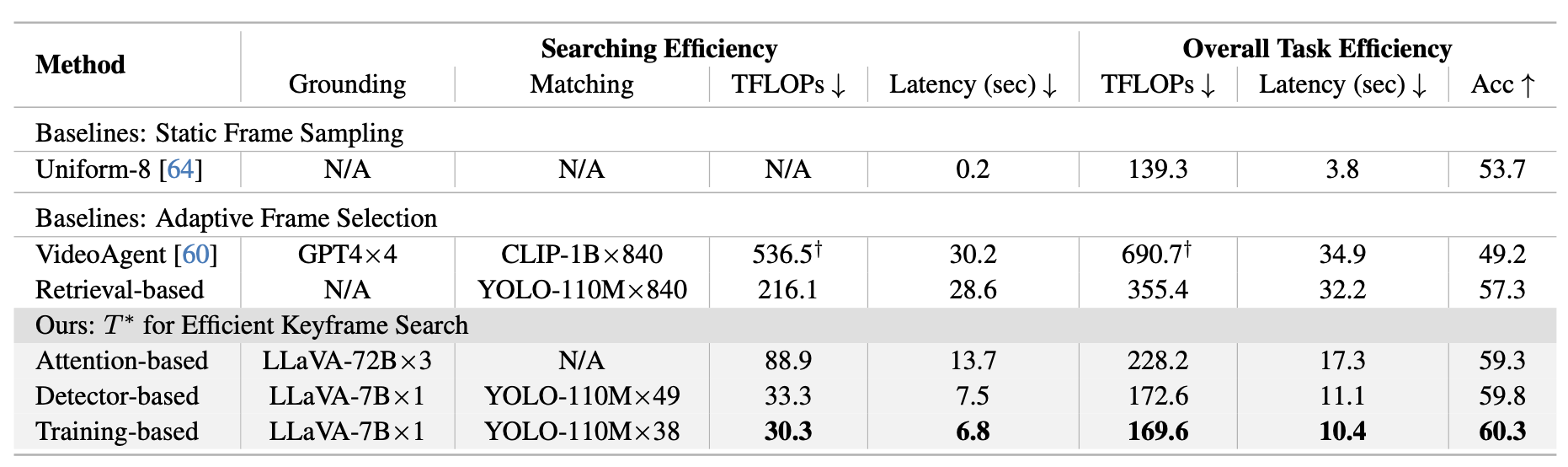

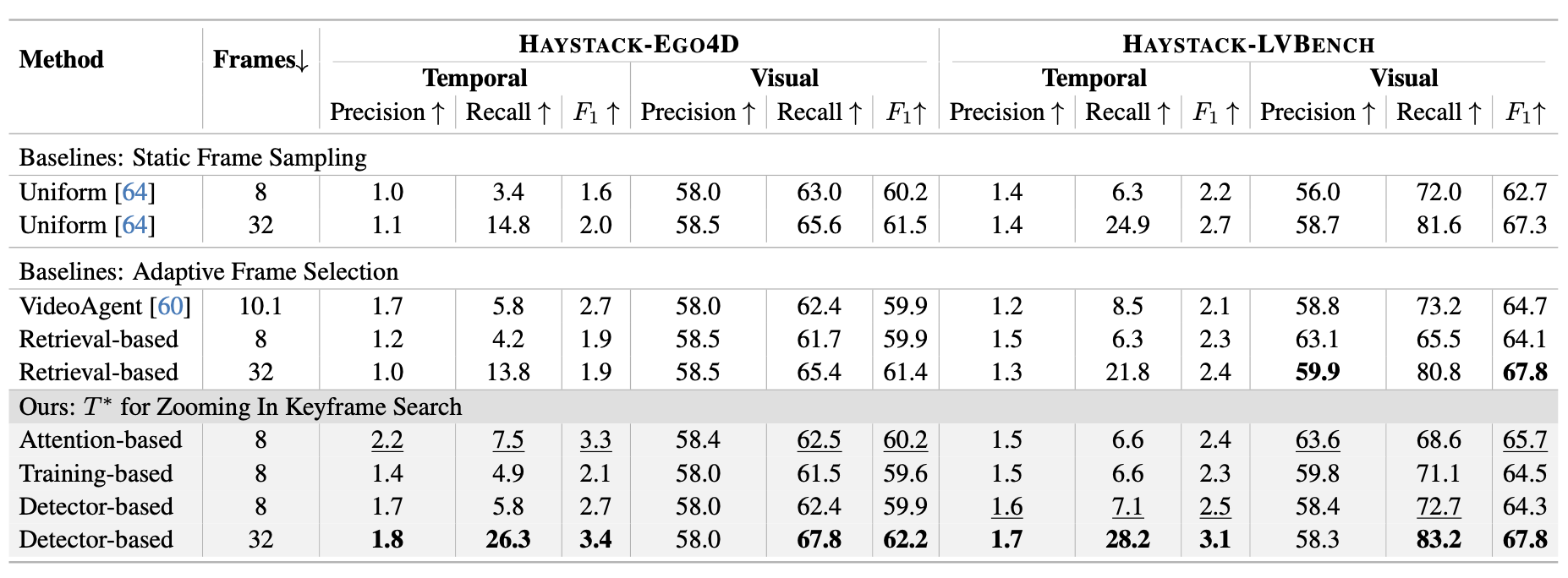

T* is a temporal search framework that efficiently identifies the key frames relevant to a given query. It recasts temporal search as spatial search, which lets it use lightweight object detectors and the visual grounding capabilities of Visual Language Models (VLMs) to locate those frames. T* performs strongly both with and without additional training, so it can be used as is or after further training.
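To make the idea of turning temporal search into spatial search more concrete, here is a minimal sketch. It is not the T* implementation. It assumes that sampled frames are laid out as cells of one grid image, that some detector or VLM grounding step returns a relevance score in [0, 1] for each cell, and that those scores are used to decide where in time to sample next. The function `search_key_frames`, the callable `score_cells`, the parameters `grid_cells`, `rounds`, `top_k`, and `spread`, and the weight update rule are all hypothetical names and choices made for illustration.

```python
import random
from typing import Callable, Dict, List, Sequence


def search_key_frames(
    num_frames: int,
    score_cells: Callable[[Sequence[int]], List[float]],
    grid_cells: int = 16,
    rounds: int = 4,
    top_k: int = 4,
    spread: int = 2,
    seed: int = 0,
) -> List[int]:
    """Iteratively sample frames, score them as grid cells, and refocus.

    `score_cells` is a hypothetical stand-in for running an object detector
    (or VLM grounding) on a grid image built from the sampled frames. It must
    return one score in [0, 1] per sampled frame, in the same order.
    """
    rng = random.Random(seed)
    weights = [1.0] * num_frames      # start from a uniform sampling prior
    best: Dict[int, float] = {}       # best score observed for each frame
    n = min(grid_cells, num_frames)   # number of frames packed into one grid

    for _ in range(rounds):
        # Weighted sampling without replacement (Efraimidis-Spirakis keys).
        keys = [(rng.random() ** (1.0 / w), i) for i, w in enumerate(weights)]
        sampled = sorted(i for _, i in sorted(keys, reverse=True)[:n])

        # "Spatial" step: each grid cell's score is attributed to its frame.
        scores = score_cells(sampled)

        for frame, score in zip(sampled, scores):
            best[frame] = max(best.get(frame, 0.0), score)
            # Assumed update rule: raise the sampling weight of the frame
            # and of its temporal neighbours, decaying with distance.
            for offset in range(-spread, spread + 1):
                j = frame + offset
                if 0 <= j < num_frames:
                    weights[j] += score / (1 + abs(offset))

    # Keep the highest-scoring frames and return them in temporal order.
    ranked = sorted(best, key=lambda f: (-best[f], f))
    return sorted(ranked[:top_k])


def toy_detector(frames: Sequence[int]) -> List[float]:
    """Toy scorer: pretends the queried object is visible around frame 120."""
    return [max(0.0, 1.0 - abs(f - 120) / 10.0) for f in frames]


key_frames = search_key_frames(num_frames=200, score_cells=toy_detector)
print(key_frames)
```

In this sketch each round spends a fixed budget of `grid_cells` scored frames, so total cost grows with the number of rounds rather than with video length. A real system would replace `toy_detector` with an actual detector or grounding model applied to the assembled grid image.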